TetNet - Evolving Tetris AI with Genetic Algorithms

TetNet (see: Tetris + Skynet) is a program that uses genetic algorithms to build an AI that can play Tetris.

View it in action! (Note: This will start the algorithm at generation zero. To see its evolved form, just press [ctrl] once it opens)

Go straight to the source on GitHub

How It Works

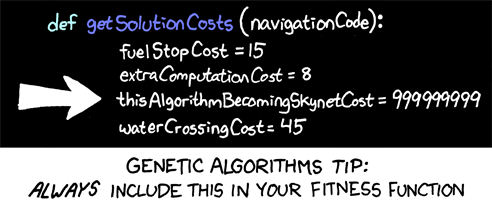

TetNet uses the tried and true method of genetic algorithms in order to create and refine the AI. Genetic algorithms work by creating a population of “genomes” that have multiple “genes”, representing parameters for the algorithm. Each of these individuals in the population is evaluated and a “fitness” score for each genome is produced. Like in real life, the fittest individuals (AKA the individuals with the highest fitness score) would go on to reproduce for the next generation and the genes that made these individuals fit would hopefully be passed down. Mutation also occurs, randomly editing genes in the children of the genomes in order to hopefully create more beneficial features. Each new generation should become fitter than the last, allowing the genetic algorithm to evolve to better solve the given problem (in this case, to get the highest score in Tetris in 500 moves).

Let’s break this down, shall we?

id: The unique identifier for the genome.

rowsCleared: The weight of each row cleared by the given move

weightedHeight: The weight of the “weightedHeight” variable, which is the absolute height of the highest column to the power of 1.5

cumulativeHeight: The weight of the sum of all the column’s heights

relativeHeight: The weight of the highest column minus the lowest column

holes: The weight of the sum of all the empty cells that have a block above them (basically, cells that are unable to be filled)

roughness: The weight of the sum of absolute differences between the height of each column (for example, if all the shapes on the grid lie completely flat, then the roughness would equal 0).

This genome represents an algorithm that will be used to “score” each possible move that could be made (for example dropping an I block into the 6th column). The values for each property represent the weight of each variable in the scoring equation. So for example, if the given move cleared 2 rows when fully executed, and the “rowsCleared” parameter equaled 0.5, then the result would be 1 (which would then be added against all the other variables multiplied by parameters in order to obtain the move’s score).

A “move” occurs when a new shape appears at the top of the grid. The genome scores each possible move by rating each move with genome’s parameters, and then looks at every move that could occur AFTER this move is made and rates that too. It can do this because Tetris tells the player what the upcoming shape will be, so the AI can use this to make sure that the current move works well with the next move to maximize score.

Of all the possible moves, the moves with the highest score (as determined by the genome) is executed and the process repeats until the genome either loses or hits the maximum number of moves allowed (in this case, 500). Once the game is over for the genome, the resultant score is used as this genome’s fitness score, which will later be compared against all of the other genomes in the population. The game is reset to the state it was at before the last genome was evaluated and the next genome is evaluated, until all the genomes in the population have a fitness score. Then, the population has evolved and a new generation begins (They grow up so fast!).

The Game

The game is based on the old retro Tetris rules from the NES. Each time a block moves down, the score is incremented by one (note: in the original games, this only occurred if you sped the shapes down). When a row is cleared, the score is increased by 400, 1000, 3000, or 12000, depending on how many rows are cleared at once (this models the scoring system of level 9 in the old Tetris games). The Tetris Wiki was my reference for these scoring parameters.

Emerging Behaviors

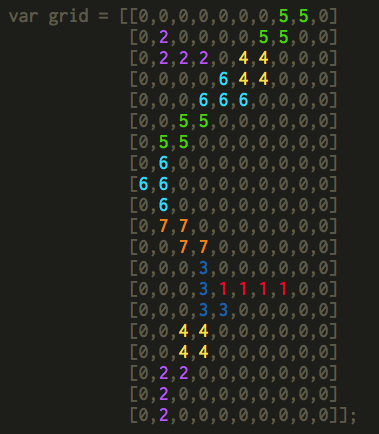

Generation 0:

At this point, all the genomes are randomly generated, so the AI doesn’t really know what its doing. This can lead to some fun results, including some genomes being created with positive height related weights resulting in them attempting to stack the shapes as high as possible:

Reach for the stars, genome number 23

These erratic behaviors obviously result in the game ending quickly (and so ending with a low score), so the genomes that produce these behaviors will most likely not pass their genes on to the next generation.

Generations 1 through 25:

rowsCleared: The weight of clearing rows remains relatively constant over time, between 0.3 and 0.4 over 25 generations. No elite genome (AKA the best genome from each generation) has a negative weight for the number of rows cleared, which makes sense as the score is most increased when rows are cleared.

weightedHeight: This parameter was added so that the algorithm would be able to detect if the blocks were stacking too high, and respond accordingly. Over the generations, the weighted height weight (…) also stays negative, ranging between -0.07 and -0.08 the entire time. This keeps the absolute height of the stacked blocks down, especially when they stack too high.

cumulativeHeight: Unlike the previous weights, the cumulative height weight varies greatly between genomes ranging from -0.4 to -0.8 over time. Either way, the cumulative height is always considered significant in almost every genome.

holes: As with most of the other parameters, this value remained negative for most of the time, hovering around the -0.1 range.

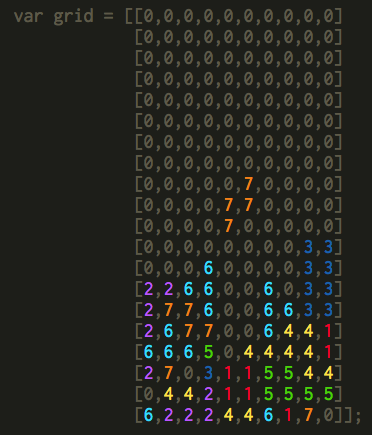

relativeHeight: This is where the algorithm surprised me. It seems like common sense that an algorithm meant to play Tetris would wish to keep the height low, and in the first elites that emerged, this ended up being the case with the relative height parameter staying around -0.12. But during generation 5, a mutation must’ve occurred that pushed the relative height weight parameter to a positive value of 0.5. From here on, the value settled to around 0.028 (though it sometimes spiked up or down). Here is my guess for why this occurred. In the beginning generations, the genomes that competed were focused on lasting as long as possible in order to continually increase their score as they attempted to use all 500 moves (the maximum amount of moves allowed per genome). This forced the genomes to play it safe, as they were not yet optimized properly and so had to worry about losing early on. But once they began to get close to the 500 maximum moves, they started to have to optimize more, in order to get as many points as possible in their limited amount of moves. This would pressure the genomes to evolve into risk takers, in order to capitalize as much as possible on the combo bonuses that occur when more than one row is cleared at once. Since relative height is the difference between the smallest column and the tallest column, the genomes would weight this positively so that they could build multiple rows of blocks with a single valley or two. Once the weighted height became too large, the valleys could be filled and multiple rows could be cleared at once (which can result in score bonuses that are over 10 times as great as clearing the same number of rows individually).

An example of the algorithm setting itself up for multiple clears at once

Conclusion

I have attempted to make TetNet three times in the past since freshman year of highschool, to no avail. This time, armed with more programming knowledge and a bit too much time on my hands, I finally succeeded. In the end, this was a fun experiment and I certainly want to try and see if I can make the algorithm any better. My next goal is to integrate the program with my neural network library cerebrum.js, so that more complex decisions can be made for as to where to place the next shapes. If you have any questions, suggestions, or are planning on doing this yourself and just want to know where to start, send me a message using my contact page and I will be sure to do my best to help!

References

Here are some sites that helped me when deciding which parameters to use for the algorithm:

Coding a Tetris AI using a Genetic Algorithm

Tetris AI - The (Near) Perfect Bot

http://cs229.stanford.edu/proj2015/238_poster.pdf

Want to get in touch and talk about one of my projects, opportunities, or just feel like chatting?

Feel free to comment below or email me at idrees@@example.comidreesinc.com

Check me out on Github: https://github.com/IdreesInc

Follow me on Twitter: https://twitter.com/IdreesInc

Reach out on LinkedIn: https://www.linkedin.com/in/idrees-hassan/

Or watch my videos on YouTube!